Cutting corners: In a surprising turn for the fast-evolving world of artificial intelligence, a new study has found that AI-powered coding assistants may slow seasoned software developers down rather than speed them up, even though faster work is the main reason developers adopt these tools.

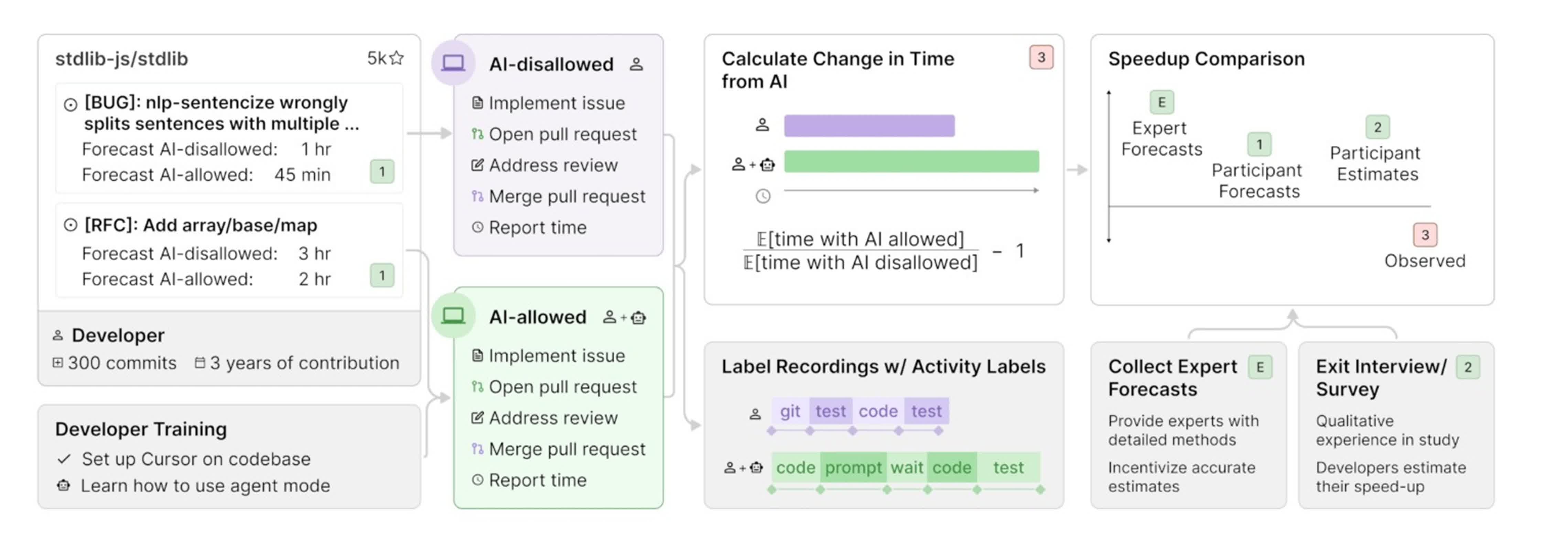

The research, conducted by the non-profit Model Evaluation & Threat Research (METR), set out to measure the real-world impact of advanced AI tools on software development. Over several months in early 2025, METR observed 16 experienced open-source developers as they tackled 246 genuine programming tasks – ranging from bug fixes to new feature implementations – on large code repositories they knew intimately. Each task was randomly assigned to either permit or prohibit the use of AI coding tools, with most participants opting for Cursor Pro paired with Claude 3.5 or 3.7 Sonnet when allowed to use AI.

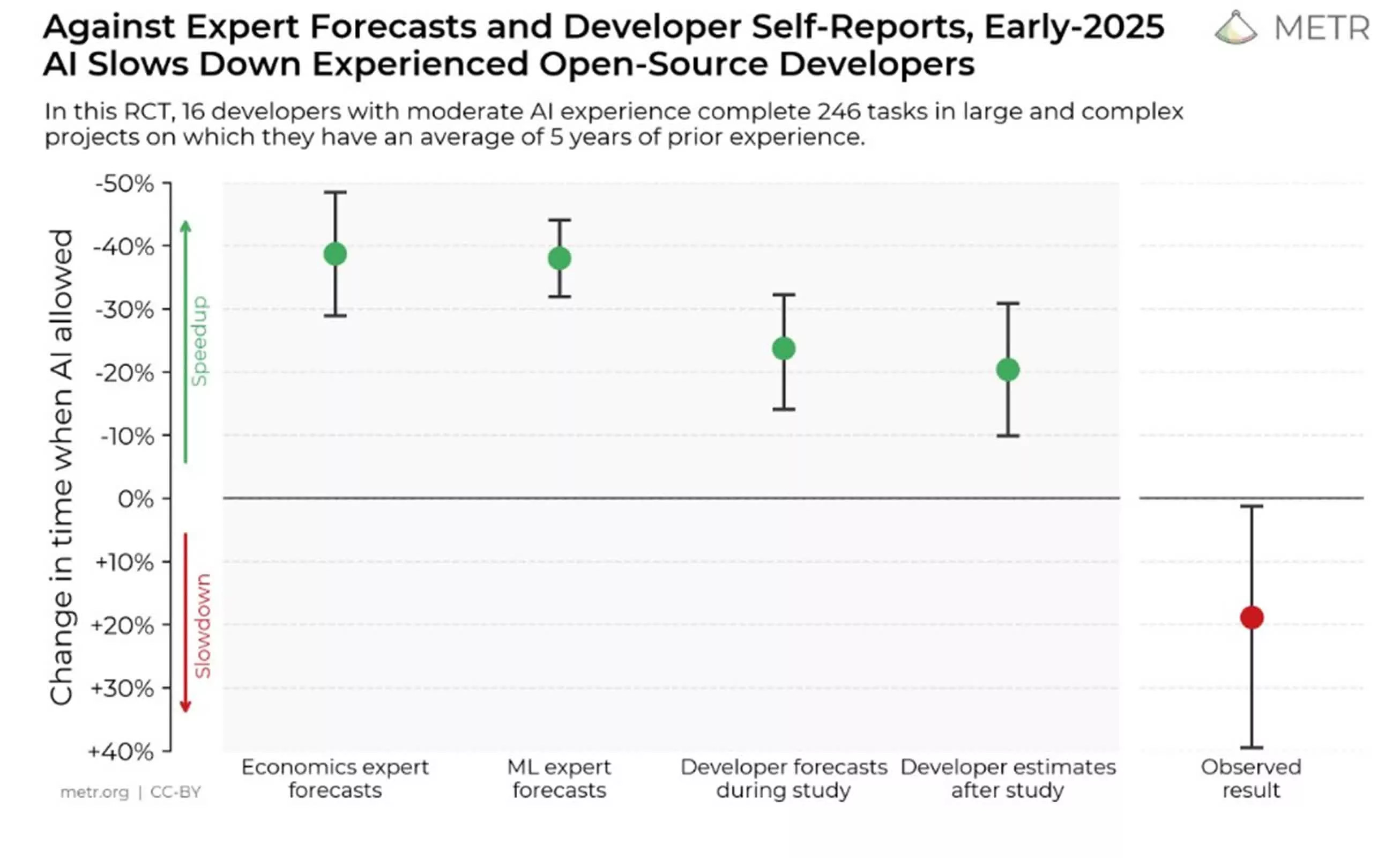

Before beginning, developers confidently predicted that AI would make them 24 percent faster. Even after the study concluded, they still believed their productivity had improved by 20 percent when using AI. The reality, however, was starkly different. The data showed that developers actually took 19 percent longer to finish tasks when using AI tools, a result that ran counter not only to their perceptions but also to the forecasts of experts in economics and machine learning.
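To make the gap between perception and measurement concrete, here is a minimal sketch that applies the three reported percentages to a single task. The 100-minute baseline is a hypothetical figure chosen for easy arithmetic, not a number from the study, and the sketch assumes "24 percent faster" and "improved by 20 percent" both mean a reduction in completion time of that size.

```python
def minutes_with_ai(baseline_minutes: float, pct_change_in_time: int) -> float:
    """Scale a no-AI completion time by a percentage change in time.

    Negative values mean the task finishes sooner, positive values mean later.
    """
    return baseline_minutes * (100 + pct_change_in_time) / 100


# Hypothetical task that takes 100 minutes without AI (assumption, not study data).
BASELINE_MINUTES = 100.0

forecast = minutes_with_ai(BASELINE_MINUTES, -24)  # predicted before the study
believed = minutes_with_ai(BASELINE_MINUTES, -20)  # self-assessed afterwards
observed = minutes_with_ai(BASELINE_MINUTES, 19)   # measured slowdown

# Distance between what developers believed and what was measured.
perception_gap = observed - believed

print(f"forecast: {forecast:.0f} min")
print(f"believed: {believed:.0f} min")
print(f"observed: {observed:.0f} min")
print(f"perception gap: {perception_gap:.0f} min")
```

Under these assumptions, a task the developer believes took 80 minutes with AI would actually have taken 119, a gap of 39 minutes on a 100-minute baseline.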

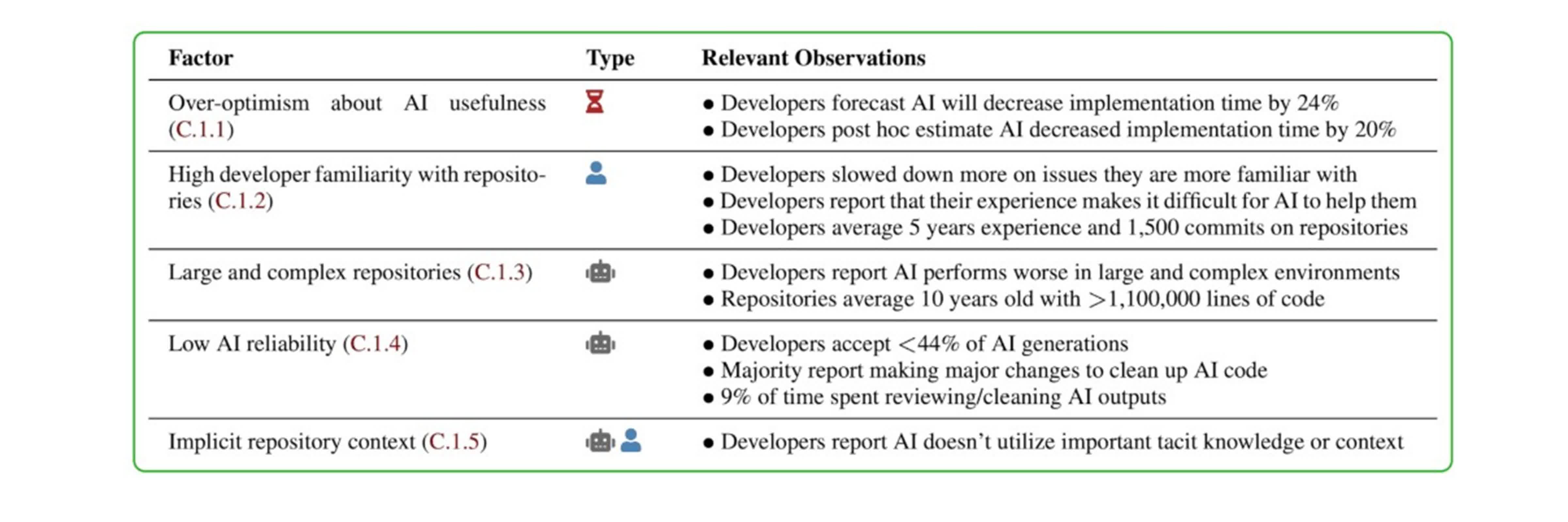

The researchers dug into possible reasons for this unexpected slowdown and identified several contributing factors. First, developers' optimism about the usefulness of AI tools often outpaced the technology's actual capabilities. Second, many participants were highly familiar with their codebases, leaving little room for AI to offer meaningful shortcuts. Third, the complexity and size of the projects – often exceeding a million lines of code – posed a challenge for AI, which tends to perform better on smaller, more contained problems. Fourth, the reliability of AI suggestions was inconsistent: developers accepted less than 44 percent of the code the tools generated, and spent significant time reviewing and correcting that output. Finally, AI tools struggled to grasp the implicit context within large repositories, leading to misunderstandings and irrelevant suggestions.

The study's methodology was rigorous. Each developer estimated how long a task would take with and without AI, then worked through the issues while recording their screens and self-reporting the time spent. Participants were compensated $150 per hour to ensure professional commitment to the process. The results remained consistent across various outcome measures and analyses, with no evidence that experimental artifacts or bias influenced the findings.

Researchers caution that these results should not be overgeneralized. The study focused on highly skilled developers working on familiar, complex codebases. AI tools may still offer greater benefits to less experienced programmers or those working on unfamiliar or smaller projects. The authors also acknowledge that AI technology is evolving rapidly, and future iterations could yield different outcomes.

Despite the slowdown, many participants and researchers continue to use AI coding tools. They note that, while AI may not always speed up the process, it can make certain aspects of development less mentally taxing, transforming coding into a task that is more iterative and less daunting.