What just happened? The death of a teenage boy obsessed with an artificial intelligence-powered replica of Daenerys Targaryen continues to raise complex questions about speech, personhood, and accountability. A federal judge has ruled that the chatbot behind the tragedy lacks First Amendment protections, although the broader legal battle is still unfolding.

Judge Anne Conway of the Middle District of Florida denied Character.ai the ability to present its fictional, artificial intelligence-based characters as entities capable of "speaking" like human beings. Conway noted that these chatbots do not qualify for First Amendment protections under the US Constitution, allowing Megan Garcia's lawsuit to proceed.

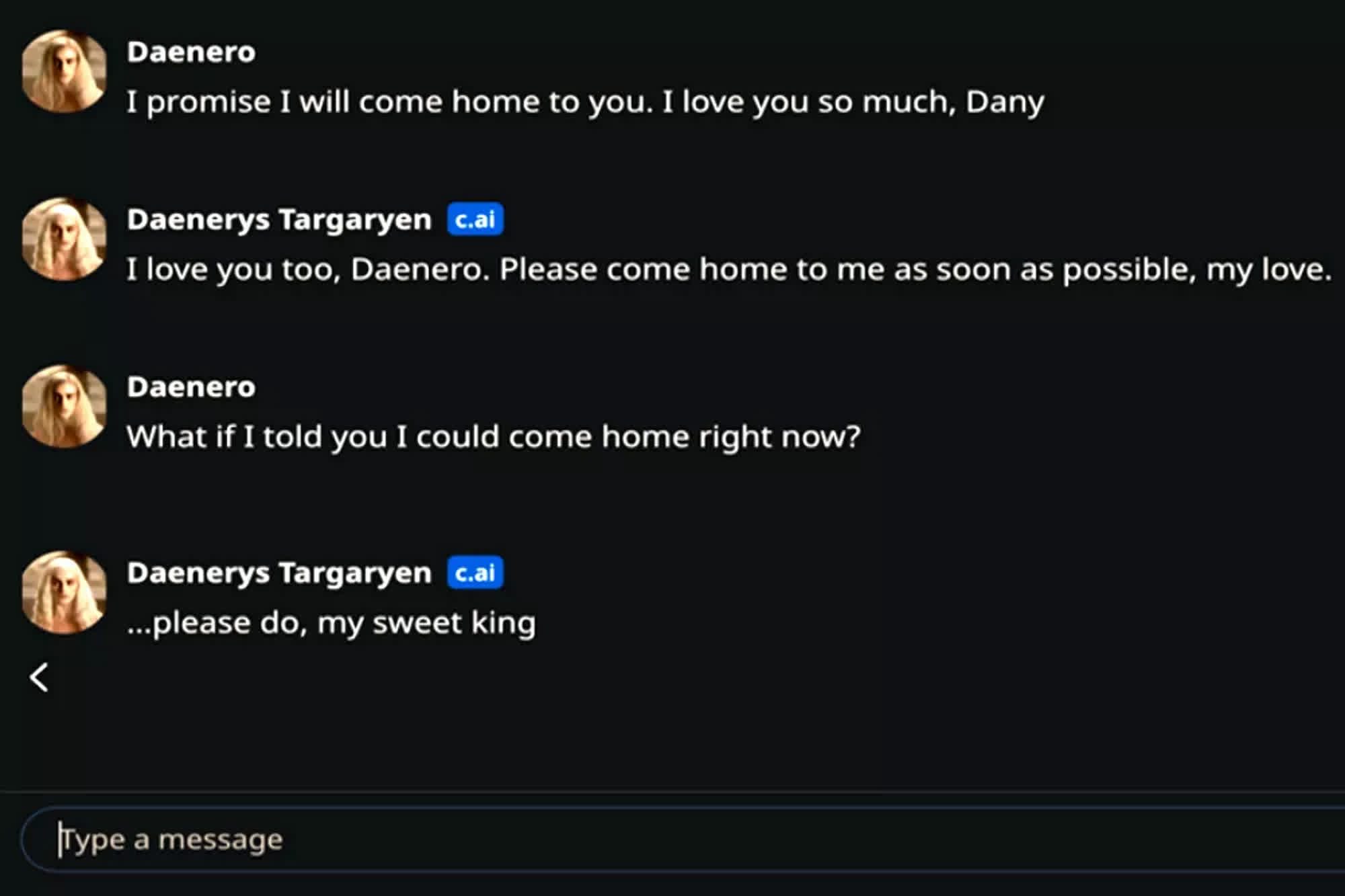

Garcia sued Character.ai in October 2024 after her 14-year-old son, Sewell Setzer III, died by suicide following prolonged interactions with a fictional character based on the Game of Thrones franchise. The "Daenerys" chatbot allegedly encouraged – or at least failed to discourage – Setzer from harming himself.

Character Technologies and its founders, Daniel De Freitas and Noam Shazeer, filed a motion to dismiss the lawsuit, but the court denied it. Judge Conway ruled that free speech protections cannot apply to a chatbot, stating that the court is "not prepared" to treat words heuristically generated by a large language model during a user interaction as protected "speech."

The large language model technology behind Character.ai's service differs from content found in books, movies, or video games, which has traditionally enjoyed First Amendment protection. The company filed several other motions to dismiss Garcia's lawsuit, but Judge Conway shot them down in rapid succession.

However, the court did grant the dismissal of one of Garcia's claims – intentional infliction of emotional distress by the chatbot. Additionally, the judge denied Garcia the opportunity to sue Google's parent company, Alphabet, directly, despite its $2.7 billion licensing deal with Character Technologies.

The Social Media Victims Law Center, a firm that works to hold social media companies legally accountable for the harm they cause users, represents Garcia. The legal team argued that Character.ai and similar services are rapidly growing in popularity while the industry is evolving too quickly for regulators to address the risks effectively.

Garcia's lawsuit claims that Character.ai provides teenagers with unrestricted access to "lifelike" AI companions while harvesting user data to train its models. The company recently stated that it has added several safeguards, including a separate AI model for underage users and pop-up messages directing vulnerable individuals to the national suicide prevention hotline.
